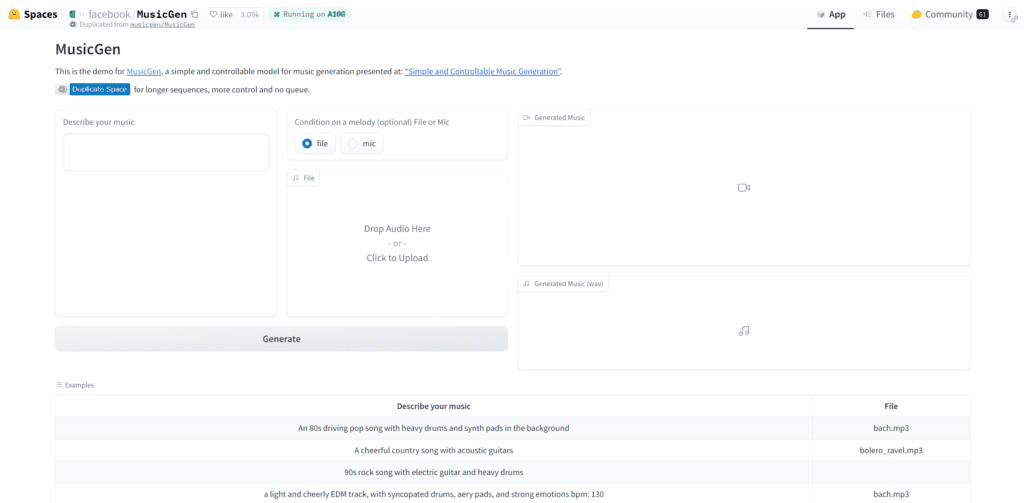

MusicGen is a cutting-edge model for simple and controllable music generation. It is a single-stage auto-regressive Transformer trained over a 32 kHz EnCodec tokenizer that uses 4 codebooks sampled at 50 Hz.
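To make the tokenizer figures concrete, here is a small sketch of the arithmetic they imply. It assumes only the three numbers stated above (32 kHz audio, 4 codebooks, 50 frames per second). The constant and function names are illustrative and are not part of any MusicGen API.

```python
from __future__ import annotations

# Figures from the description above; the names are illustrative only.
SAMPLE_RATE_HZ = 32_000
FRAME_RATE_HZ = 50
NUM_CODEBOOKS = 4


def token_grid_shape(duration_s: float) -> tuple[int, int]:
    """Return (codebooks, frames) of the token grid for a clip of this length."""
    frames = round(duration_s * FRAME_RATE_HZ)
    return NUM_CODEBOOKS, frames


samples_per_frame = SAMPLE_RATE_HZ // FRAME_RATE_HZ  # 640 samples, i.e. 20 ms of audio
tokens_per_second = NUM_CODEBOOKS * FRAME_RATE_HZ    # 200 discrete tokens per second

print(samples_per_frame, tokens_per_second, token_grid_shape(10))
```

A 10-second clip therefore becomes a 4 × 500 grid of discrete tokens. That is 200 tokens per second, although generation does not need one step per token (see the key features below).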

Key Features:

- Single-Stage Auto-Regressive Model: Generates all 4 codebooks with a single model rather than a cascade of separate models.
- No Self-Supervised Semantic Representation Required: Unlike existing methods such as MusicLM, MusicGen does not rely on a self-supervised semantic representation.
- Parallel Codebook Prediction: Introduces a small delay between codebooks so they can be predicted in parallel, reducing generation to 50 auto-regressive steps per second of audio.
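The codebook delay can be sketched with NumPy. This sketch assumes that codebook k is shifted right by k steps, which gives K tokens per step that the model can predict in parallel. The `PAD` value and both function names are hypothetical illustrations, not MusicGen's actual implementation.

```python
import numpy as np

PAD = -1  # hypothetical placeholder meaning "no token at this position"


def apply_delay_pattern(codes: np.ndarray) -> np.ndarray:
    """Shift codebook k right by k steps.

    codes: (K, T) array of codebook indices, one column per 50 Hz frame.
    Returns a (K, T + K - 1) array. Column s is what would be predicted at
    step s: codebook k of frame s - k, for every k at once.
    """
    num_codebooks, num_frames = codes.shape
    steps = num_frames + num_codebooks - 1
    delayed = np.full((num_codebooks, steps), PAD, dtype=codes.dtype)
    for k in range(num_codebooks):
        delayed[k, k : k + num_frames] = codes[k]
    return delayed


def undo_delay_pattern(delayed: np.ndarray) -> np.ndarray:
    """Inverse of apply_delay_pattern: realign every codebook to its frame."""
    num_codebooks, steps = delayed.shape
    num_frames = steps - num_codebooks + 1
    return np.stack([delayed[k, k : k + num_frames] for k in range(num_codebooks)])


codes = np.arange(12).reshape(4, 3)  # toy values: 4 codebooks x 3 frames
delayed = apply_delay_pattern(codes)
print(delayed)
print(undo_delay_pattern(delayed))
```

Each column of `delayed` is filled in one auto-regressive step. Because codebook k of a frame appears one step after codebook k − 1 of the same frame, the model still sees the coarser codebook before predicting the finer one. The total cost is T + K − 1 steps instead of K × T.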

Training Data:

- 20K Hours of Licensed Music: Trained on 20K hours of licensed music, including an internal dataset of 10K high-quality tracks plus ShutterStock and Pond5 music data.

Use Cases:

- Efficient Music Generation: Generate music with only 50 auto-regressive steps per second of audio.
- Controllable Output: Steer generation with text descriptions, or with a reference melody when using the melody-conditioned variant.
- Diverse Music Styles: Explore a wide range of styles drawn from 20K hours of licensed training music.
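The "50 steps per second" figure can be checked with simple arithmetic. The sketch below compares the delayed scheme with a hypothetical baseline that flattens all 4 codebooks into one long sequence. It assumes a delay of k steps for codebook k, so a clip of T frames needs T + K − 1 steps. The function names are illustrative.

```python
FRAME_RATE_HZ = 50
NUM_CODEBOOKS = 4


def steps_flattened(duration_s: float) -> int:
    """Hypothetical baseline: predict every codebook of every frame one by one."""
    return NUM_CODEBOOKS * round(duration_s * FRAME_RATE_HZ)


def steps_delayed(duration_s: float) -> int:
    """Delayed codebooks: all K predicted per step, plus K - 1 steps of offset."""
    return round(duration_s * FRAME_RATE_HZ) + NUM_CODEBOOKS - 1


for seconds in (1, 10, 30):
    print(seconds, steps_flattened(seconds), steps_delayed(seconds))
```

For a 10-second clip the flattened baseline needs 2000 steps, while the delayed scheme needs 503. That works out to roughly 50 steps per second, and the fixed K − 1 overhead matters less as clips get longer.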

MusicGen combines a simple single-stage design, parallel codebook prediction, and a large licensed training set. Whether you are aiming for efficiency, control, or diverse musical styles, it is a strong choice for music generation.
