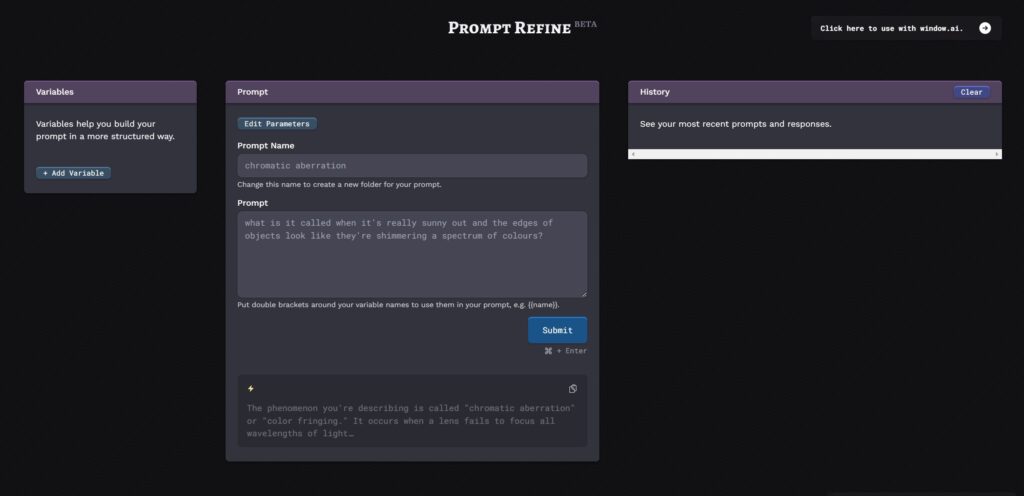

Prompt Refine is an LLM (large language model) playground that helps users run prompt experiments to improve the performance and effectiveness of their language models. Inspired by Chip Huyen's article "Building LLM Applications for Production," the tool provides a user-friendly interface for running and analyzing prompt experiments.

Key Features:

- Prompt Experimentation: Run prompt experiments to improve the performance of language models.
- Compatibility: Works with models from various providers, including OpenAI, Anthropic, Together, and Cohere, as well as local models.
- History Tracking: Save experiment runs to the user's history and compare them with previous runs.
- Folder Organization: Create folders to organize and manage multiple experiments efficiently.
- CSV Export: Export experiment runs as CSV files for further analysis.

Use Cases:

- Researchers and developers working with language models, seeking to optimize prompt designs.
- Data scientists and AI practitioners looking to improve the performance of their language models.
- Individuals interested in exploring and experimenting with different prompt configurations for enhanced model outputs.

Prompt Refine provides a convenient, intuitive playground for running prompt experiments and refining the prompts you send to language models.

https://twitter.com/marissamary